Blogpost written by Fynn Wojke and Peter Khalil

Key points:

- When online services are not working as intended, AI chatbots that seem friendly and charming appear to lower customer frustration.

- As customer service shifts increasingly to the digital world, chatbots that react with a sense of empathy and playfulness may be key to maintaining good customer relations.

Customer Service in a Digital World

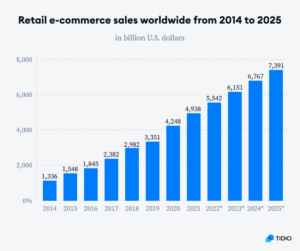

These days, there’s a noticeable trend of filling online shopping carts more often than physical ones, as visualized in Figure 1. Therefore, it’s now more crucial than ever for companies to prioritize efficient customer support.

Imagine a package you were waiting for didn’t show up on time, and all you got from the service center was a cold, automated ‘sorry’. Now, picture a chatbot that can actually make you smile about the inconvenience with a playful joke or a cute message. This not only works as a clever distraction from the inconvenience but also builds a bridge to the ‘human’ in customer service.

This blogpost is about making artificially intelligent (AI) chatbots ‘act cute.’ At first, this may sound simple, but it is based on deep knowledge about how humans respond to cuteness. A study published in 2022 by Zhang et al. investigates whether chatbots that use playful jokes or cuteness (like the kind we see in babies) can help calm customers down after something goes wrong, such as a late delivery.

The Role of a Chatbot’s Behavior

The study’s method is as interesting as the idea itself. The researchers put participants in a situation where an online service went wrong, something we all find annoying.

Then, the researchers asked people to reach out to different chatbots with their problems in a controlled setting: some chatbots had playful and joking characteristics, some were ‘cute’, and others showed no special behavior. ‘Acting cute’ here means using playful humor and charm on purpose to make customers happy, especially when they’re having problems with a service. By comparing reactions across these conditions, the researchers could see how each type of chatbot behavior affected people’s moods.

Unveiling the Findings: Behavior Really Matters!

The results were clear: chatbots that ‘act cute’ help to ease customer irritation.

To act cute, chatbots adopted two different behaviors, namely ‘whimsical’ and ‘kindchenschema’ (German for ‘baby schema’). Both strategies work by influencing the customer’s emotional state through psychological mechanisms rooted in human instincts. In other words, they aim to redirect the customer’s focus away from their negative feelings about the service failure. While whimsical behavior counts on the human desire for entertainment and distraction, the effectiveness of the kindchenschema is rooted in the instinct to nurture and protect.

However, the researchers found that the success of this approach depends on the specific situation. For more severe problems, or if the customer was not comfortable with technology, chatbots that went for a playful, humorous approach (whimsical) were more effective, especially with men. Chatbots that seemed childlike (kindchenschema) worked better for people who were more at ease with technology and were in general more successful with women.
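To make the situational pattern more concrete, here is a minimal Python sketch of how a service provider might turn it into a simple selection rule. It is only an illustration of the findings as summarized above, not the study's method: the names `CustomerContext`, `severe_failure`, `tech_comfortable`, and `choose_strategy` are hypothetical, and the gender differences reported in the study are deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class CustomerContext:
    """Hypothetical description of a complaint; both fields are assumptions."""
    severe_failure: bool    # True if the service failure is considered severe
    tech_comfortable: bool  # True if the customer is at ease with technology


def choose_strategy(ctx: CustomerContext) -> str:
    """Pick an 'acting-cute' strategy following the pattern described above.

    Assumed rule: severe problems or low comfort with technology favor the
    whimsical (playful, humorous) style; otherwise the kindchenschema
    (childlike) style is used.
    """
    if ctx.severe_failure or not ctx.tech_comfortable:
        return "whimsical"
    return "kindchenschema"


# Example: a minor delay reported by a customer who is at ease with technology
print(choose_strategy(CustomerContext(severe_failure=False, tech_comfortable=True)))
```

A real system would need far more nuance than two yes/no flags, but the sketch shows the basic idea that one chatbot could switch personalities depending on the situation.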

A Call to Action for Emotionally Intelligent AI

The findings show that personalizing customer service is the route to take.

While a ‘cute’ chatbot may be just right for some people, different behaviors may suit others. Therefore, knowing their customers will be crucial for any online service provider in the near future. We believe that, as different behavioral strategies can help businesses turn bad customer experiences into good ones, the application of ‘acting-cute’ AI might set a new standard in terms of customer happiness. Thus, we argue that AI will soon not only solve problems but also serve to understand us, empathize with us, and enhance overall customer relations.

While the findings seem coherent, there is still room for discussion. Given the differences in how men and women respond to different chatbot personalities, we wonder which other demographic or cultural factors a company needs to consider when implementing an AI strategy for customer service.

As AI keeps improving, to the point of being indistinguishable from humans in certain tasks, ethical questions arise. We wonder where policy makers will draw the line on a machine’s emotional intelligence, and which ethical considerations would come into play if there are no restrictions.

References

Zhang, T., Feng, C., Chen, H. et al. Calming the customers by AI: Investigating the role of chatbot acting-cute strategies in soothing negative customer emotions. Electron Markets 32, 2277–2292 (2022). https://doi.org/10.1007/s12525-022-00596-2